At the last Data Potluck, consultant and writer Q Ethan McCallum explained how to put Hadoop to work, and how to use Elastic MapReduce (EMR), the hosted Hadoop solution provided by Amazon Web Services.

What is Hadoop?

Hadoop is used to power large-scale data analysis and processing. You’ve probably performed data analysis on a small scale without even knowing it. For example, when you look up directions to a new restaurant, the map program you’re using has to calculate the quickest route from Point A to Point B. Depending on your device, that can take some time.

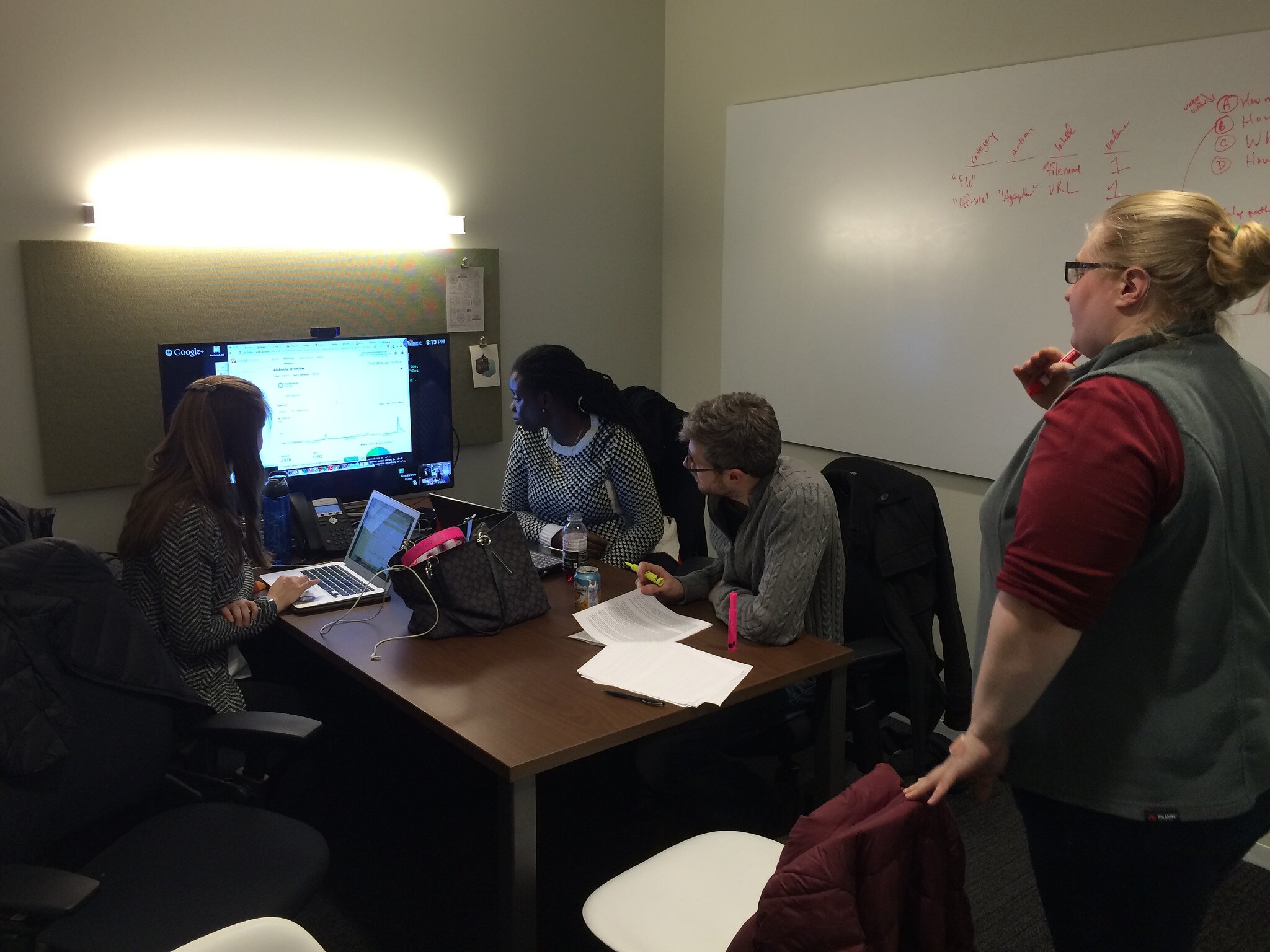

Photo by Alexander Torrenegra

Hadoop can handle far more complex calculations. For example, if you’ve ever scanned a document so you could extract its text with optical character recognition (OCR), you know the process can take a while. The New York Times used Hadoop to process 70 years’ worth of newspapers in less than 24 hours.

Hadoop accomplishes this by breaking the work into many smaller parts. Instead of one machine performing OCR on 435 newspapers, Hadoop can create several workers that each perform OCR on only a handful of newspapers. Hadoop shines when a job can be split into many independent tasks that run at the same time.
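The split-then-process idea can be sketched in a few lines of Python. This is not Hadoop’s API: it is a minimal single-machine sketch, assuming a hypothetical `fake_ocr` function in place of real OCR and a thread pool in place of a cluster’s worker machines. What matters is that each chunk is an independent task, so chunks can be handed to different workers.

```python
from concurrent.futures import ThreadPoolExecutor


def fake_ocr(page: str) -> str:
    # Hypothetical stand-in for a real OCR step: it only collapses whitespace.
    return " ".join(page.split())


def chunk(items: list[str], size: int) -> list[list[str]]:
    # Split the full workload into independent chunks of at most `size` items.
    return [items[i:i + size] for i in range(0, len(items), size)]


def process_chunk(pages: list[str]) -> list[str]:
    # One worker handles one chunk without needing any other chunk's results.
    return [fake_ocr(p) for p in pages]


def run_parallel(pages: list[str], chunk_size: int = 4, workers: int = 4) -> list[str]:
    chunks = chunk(pages, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() runs chunks concurrently but yields results in input order.
        results = pool.map(process_chunk, chunks)
        return [text for part in results for text in part]


if __name__ == "__main__":
    pages = ["  front   page ", "sports\tpage", "weather page", "obituaries"]
    print(run_parallel(pages, chunk_size=2, workers=2))
```

On a real cluster each chunk would run on a separate machine; threads are used here only so the sketch runs anywhere, and in CPython they would not speed up CPU-heavy work like genuine OCR.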

This approach also makes Hadoop scalable, so it can grow with you: if you need more processing power or more storage, you can simply add more servers.

The Resiliency of Hadoop

Hadoop runs on a cluster of servers. If one server breaks or has an issue, Hadoop can simply shift that server’s work to another one. Hadoop also protects against data loss by storing multiple copies of the data (three by default) on different servers across the cluster.
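A toy model of replication shows why three copies protect against a failed server. This sketch, with hypothetical function names, places each block of data on three consecutive nodes in round-robin order. Real HDFS uses a rack-aware placement policy, so treat this only as an illustration of the idea, not of Hadoop’s actual algorithm.

```python
def place_replicas(block_ids: list[str], nodes: list[str], replication: int = 3) -> dict[str, list[str]]:
    # Simplified round-robin placement (not HDFS's actual rack-aware policy).
    if replication > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    placement = {}
    for i, block in enumerate(block_ids):
        # Consecutive nodes starting at offset i are distinct because replication <= len(nodes).
        placement[block] = [nodes[(i + k) % len(nodes)] for k in range(replication)]
    return placement


def readable_after_failure(placement: dict[str, list[str]], failed: set[str]) -> bool:
    # Every block survives if at least one of its replicas sits on a healthy node.
    return all(any(node not in failed for node in replicas) for replicas in placement.values())


if __name__ == "__main__":
    layout = place_replicas(["b0", "b1", "b2"], ["n1", "n2", "n3", "n4"])
    print(layout)
    print(readable_after_failure(layout, {"n1"}))              # True: one server down
    print(readable_after_failure(layout, {"n1", "n2", "n3"}))  # False: every copy of b0 is lost
```

Losing a single server never loses data in this model; only when every server holding a given block fails at once does that block become unreadable.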

Drawbacks of Hadoop

The downside to Hadoop is that it can be expensive to set up. While Hadoop itself is open source, the hardware needed to run it can be a significant investment: Hadoop requires multiple servers, plus staff to maintain them.

For example, Disney uses Hadoop for its data analysis and spent $500,000 on its Hadoop cluster. McCallum estimates that a decent-sized Hadoop cluster would cost about $150,000 to purchase.

The question potential customers have to ask is whether they will need this kind of analytic power on a daily basis, or only for one or two projects every so often.

Amazon Elastic MapReduce

If you only need Hadoop’s power for the occasional project, you can rent a Hadoop cluster from Amazon, much as you would rent an individual AWS server. A small cluster rents for 0.075 cents per minute, and a small job would cost about $3.

Renting also makes sense because Amazon takes care of all server maintenance, so you don’t need to hire an entire staff to run the cluster.
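The buy-versus-rent question comes down to simple arithmetic. The sketch below uses the figures quoted in this post (about $150,000 to buy a cluster and about $3 per small rented job) purely as example inputs. The function names and the optional staffing-cost parameter are hypothetical, and the model deliberately ignores power, space, hardware refresh, and price changes.

```python
def break_even_jobs(purchase_price: float, cost_per_job: float, staff_cost: float = 0.0) -> float:
    # Number of rented jobs whose total cost equals owning a cluster
    # (purchase price plus any staffing cost over the same period).
    if cost_per_job <= 0:
        raise ValueError("cost_per_job must be positive")
    return (purchase_price + staff_cost) / cost_per_job


def cheaper_to_rent(jobs_planned: int, purchase_price: float, cost_per_job: float, staff_cost: float = 0.0) -> bool:
    # Renting wins when the planned jobs cost less than owning and staffing a cluster.
    return jobs_planned * cost_per_job < purchase_price + staff_cost


if __name__ == "__main__":
    print(break_even_jobs(150_000, 3))      # 50000.0
    print(cheaper_to_rent(20, 150_000, 3))  # True: 20 jobs cost $60
```

Under these rough inputs, an occasional user would need tens of thousands of small jobs before owning a cluster paid off, which is the intuition behind renting for one-off projects.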

About the speaker:

Q Ethan McCallum (@qethanm) works as a professional-services consultant, with a focus on strategic matters around data and technology. He is especially interested in helping companies to build and shape their internal analytics practice.

Q’s speaking engagements include conferences, meetups, and training events. He is also engaged in a number of projects, ranging from open-government/civic-data collaborations to open-source software tools. His published work includes Business Models for the Data Economy, Parallel R: Data Analysis in the Distributed World, and Bad Data Handbook: Mapping the World of Data Problems. He is currently working on a new book on time series analysis, and another on building analytics shops (Making Analytics Work).

About Data Potluck

Data Potluck is a monthly meetup, held at the Chicago Community Trust, that connects data analytics with the non-profit world. You can join its Meetup page to get information about the next event.