City of Chicago Chief Data Officer Tom Schenk Jr spoke at last week’s Chi Hack Night about the City’s new system for predicting the riskiest restaurants in order to prioritize food inspections. With it, the City has found a way to find critical violations seven days faster.

Below, we’ve put up the slides from their presentation as well as the highlight video:

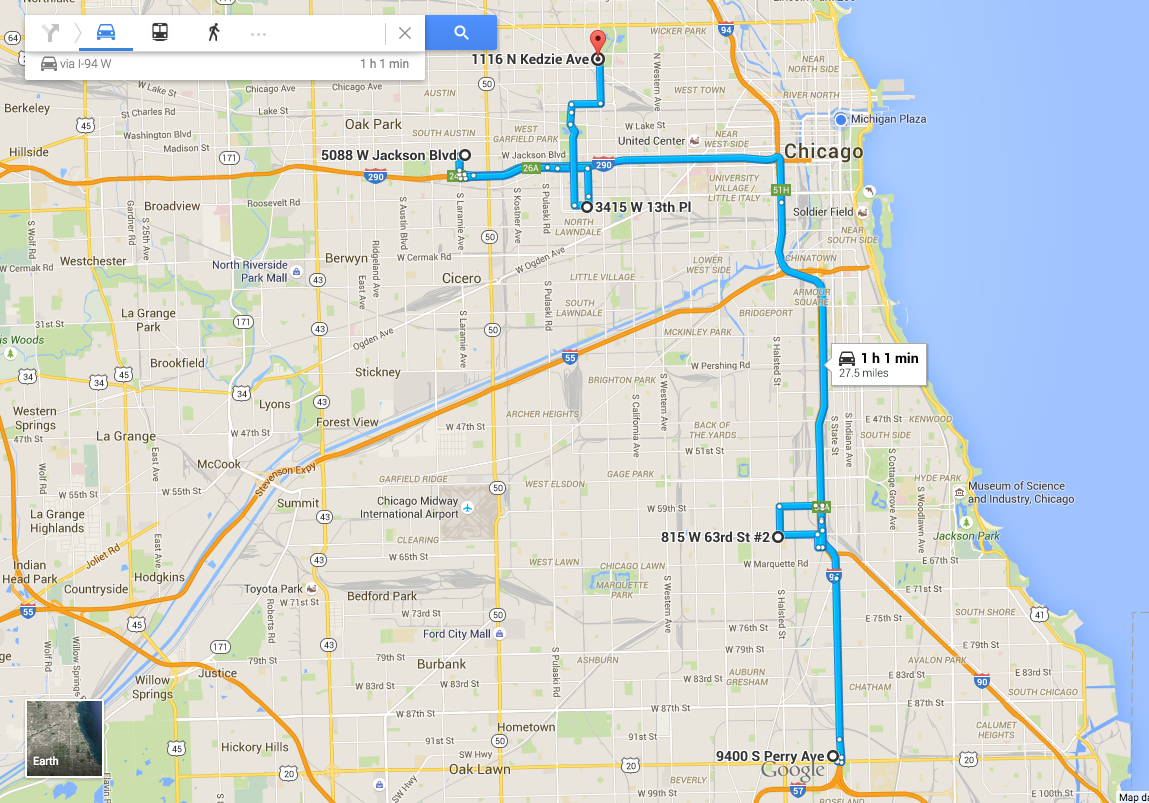

The problem with the way most cities conduct food inspections is that, by law, they have to inspect every food establishment, yet restaurants far outnumber inspectors. In Chicago, there is one inspector for every 470 restaurants. Since every establishment must be inspected, the usual approach is to inspect them in random order. However, the team knew that residents don’t get foodborne illness at random restaurants; they get sick at the few restaurants that don’t follow all the rules.

The Department of Innovation and Technology partnered with the Chicago Department of Public Health and staff from Allstate Insurance to see whether they could use analytics to predict which restaurants would have critical violations. (Side note: It was a brilliant move by the City and Allstate to put volunteer hours toward something actuaries specialize in.) Some of the data sets used to make these predictions were:

- Establishments that had previous critical or serious violations

- Three-day average high temperature (not on the portal)

- Risk level of establishment as determined by CDPH

- Location of establishment

- Nearby garbage and sanitation complaints

- The type of facility being inspected

- Nearby burglaries

- Whether the establishment has a tobacco license or has an incidental alcohol consumption license

- Length of time since last inspection

- The length of time the establishment has been operating

All of the data, with the exception of the weather and the names of the individual health inspectors, comes directly from the city’s data portal. (This builds on the city’s extensive work in opening up all this data in the first place.) By combining all of these factors, the research team was able to estimate the likelihood of a critical violation at each establishment, and that estimate is used to prioritize which establishments are inspected first.
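To make the idea of "combining factors into a likelihood, then ranking" concrete, here is a minimal sketch that assumes a logistic-regression-style score. The field names, the coefficients, and the helpers `violation_probability` and `prioritize` are all hypothetical and chosen for illustration; they are not the City's published model or its fitted values. Each establishment's factors are multiplied by a weight, summed, passed through the logistic function to get a probability between 0 and 1, and the list is then sorted from highest to lowest risk.

```python
import math
from dataclasses import dataclass


@dataclass
class Establishment:
    """A few of the factors listed above, encoded as numbers (hypothetical schema)."""
    name: str
    prior_critical: bool        # had a previous critical or serious violation
    high_temp_3day: float       # three-day average high temperature, deg F
    nearby_sanitation: int      # nearby garbage and sanitation complaints
    nearby_burglaries: int      # nearby burglaries
    has_tobacco_license: bool
    has_alcohol_license: bool   # incidental alcohol consumption license
    days_since_inspection: int


# Hypothetical coefficients for illustration only; NOT the City's fitted values.
INTERCEPT = -3.0
WEIGHTS = {
    "prior_critical": 1.2,
    "high_temp_3day": 0.02,
    "nearby_sanitation": 0.05,
    "nearby_burglaries": 0.03,
    "has_tobacco_license": 0.4,
    "has_alcohol_license": 0.3,
    "days_since_inspection": 0.004,
}


def violation_probability(e: Establishment) -> float:
    """Logistic score: p = 1 / (1 + exp(-(b0 + sum_i b_i * x_i)))."""
    # Booleans become 0.0 or 1.0 via float(); numeric fields pass through.
    z = INTERCEPT + sum(w * float(getattr(e, field)) for field, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def prioritize(establishments: list[Establishment]) -> list[Establishment]:
    """Order establishments from highest to lowest predicted risk."""
    return sorted(establishments, key=violation_probability, reverse=True)


# Example with two made-up establishments.
queue = prioritize([
    Establishment("Diner B", False, 60.0, 1, 0, False, False, 90),
    Establishment("Grill A", True, 85.0, 10, 4, False, True, 400),
])
for est in queue:
    print(est.name, round(violation_probability(est), 3))
```

The output is deliberately just a sorted list, echoing the point that the application itself is a plain ranked list rather than a map. A real model would learn its weights from historical inspection outcomes instead of having them typed in by hand.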

This is the application – it’s a list. No fancy map, no frills, just solid info for @ChiPublicHealth #chihacknight pic.twitter.com/dXZs9zlMOS — Smart Chicago (@SmartChicago) May 20, 2015

To test the system, the team conducted a double-blind study over a sixty-day period to validate the model.
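A headline result like "seven days faster" is a statement about timing: for the same set of establishments, how much sooner would critical violations be reached if inspectors followed the risk-ranked order instead of the usual one? The toy sketch below shows one simple way to compute that, assuming a fixed number of inspections per day and made-up data. The function `discovery_days` and all the data are hypothetical, and the sketch does not attempt to reproduce the City's double-blind study design.

```python
from statistics import mean


def discovery_days(order, has_violation, inspections_per_day):
    """Return the 1-based day on which each establishment with a critical
    violation is reached, if establishments are inspected in `order` at a
    fixed number of inspections per day."""
    days = []
    for position, est_id in enumerate(order):
        if has_violation[est_id]:
            days.append(position // inspections_per_day + 1)
    return days


# Toy data (made up): which establishments turn out to have a critical violation.
has_violation = {"a": False, "b": True, "c": False, "d": True, "e": False, "f": False}

baseline_order = ["a", "b", "c", "e", "f", "d"]  # e.g. the usual schedule
ranked_order = ["d", "b", "a", "c", "e", "f"]    # same set, sorted by a risk score

baseline_days = discovery_days(baseline_order, has_violation, inspections_per_day=2)
ranked_days = discovery_days(ranked_order, has_violation, inspections_per_day=2)

# Average number of days earlier that violations are found under the ranked order.
print(mean(baseline_days) - mean(ranked_days))
```

Because both orderings cover exactly the same establishments, any difference in the average comes purely from the order in which they are visited.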

The system has gotten rave reviews and coverage from a number of publications and organizations, including Harvard University, Governing Magazine, and WBEZ’s Afternoon Shift.

Aside from the important outcome of fewer people getting sick from foodborne illness in the City of Chicago, this work has another very important aspect with national impact: the entire project is open source and reproducible from end to end. We’re not just talking about the code being thrown on GitHub (although it is on the City’s GitHub account). The methodology used to make the calculations is also open source and well documented, and the project provides a training data set so that other data scientists can try to replicate the results. No other city had released its analytic models before. The Department of Innovation and Technology is openly inviting other data scientists to fork the model and attempt to improve upon it.

@CivicWhitaker Exactly, make a fool of me! (Just make sure that it’s rigorous, reproducible, and please sign the CLA) #MightRegretThisTweet

— Geneorama (@Geneorama) May 20, 2015

The City of Chicago accepts pull requests as long as you agree to their contributor license agreement.

Having the project be open source and reproducible from end to end also means that it can be deployed in other cities that have their data at the ready. (For cities that don’t, the City has also made its OpenETL toolkit available.)

The Department of Innovation and Technology has a history of opening up its work, and each piece it has released (from its data dictionary to scripts that download Socrata datasets into R data frames) builds on the ones before it.

In time, we may see not only Chicago using data science to improve the city, but also other cities building on the Chicago model to do the same.

You can find out more about the project by checking out the project page here.